In many areas of the world, resources are thin and not readily available to those who need them most. With the help of artificial intelligence technologies, resources and knowledge can be distributed and accessed in novel ways. This project illustrates one such use case on the intelligent edge, where AI can help inform healthcare professionals and, potentially, patients.

The Garage’s Vancouver internship cohort has been hard at work on AI-powered projects that further productivity and showcase the leading-edge possibilities that machine learning can turn into reality. Mobile Chest X-Ray Analysis is one such project, built by an intern team of five developers, one designer, and one program manager.

The Garage intern team (l to r): Robert Lee, Megan Roach, Michaela Tsumura, Jacky Lui, Noah Tajwar, Brendan Kellam. Not pictured: Charmaine Lee.

The 16-week internship program starts by matching interns with existing product teams within Microsoft that want to jumpstart and incubate ideas using rapid design and agile development. The interns bring valuable perspective and fresh energy, working as their own team to create prototype products rather than embedding with an existing team. The sponsoring group for this project is the Cloud AI team, who challenged the interns to leverage machine learning in new ways to infuse AI into intelligent applications and to show how a trained model can be used to improve professional workflows. The Cloud AI team had already created a solid foundation for the interns to build from: a prototype and instructions on how to train the machine learning model. The Xamarin research prototype that the interns created brings that model’s capabilities to mobile devices, including tablets, running iOS and Android™. It can also function completely offline. The project is open source and invites researchers and developers to contribute, grab the source code, and build their own prototype app.

“I learn from the thousands of patients I see each year, but this can learn from infinite [patients]” – Dr. Kevin Afra, Infectious Diseases Specialist, Fraser Health Authority

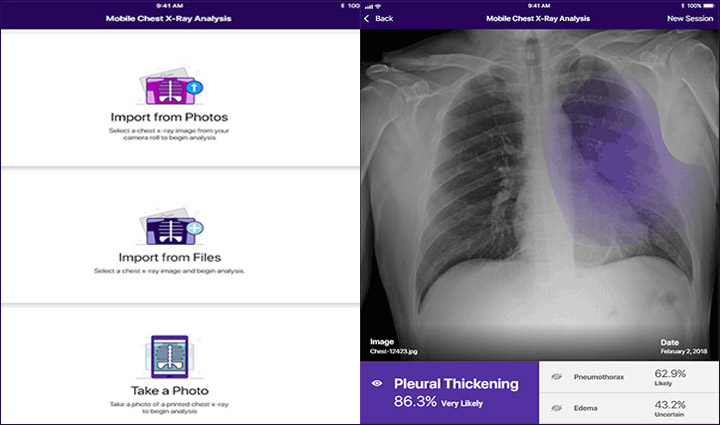

Though this experimental research project ships with an empty machine learning model, users can follow the ML Blog instructions to replace it with the pre-trained chest x-ray model. Once compiled, the research app can be used to analyze and interpret chest x-ray images and provide a preliminary prediction of the likely chest condition, based on 14 different chest conditions from the National Institutes of Health’s publicly available datasets.

Building the experiment

It’s important to note that this is a proof of concept. The sample code available is intended for research and development use only and is not intended for use in clinical diagnosis or clinical decision-making or for any other clinical use. Though the performance of the sample code for clinical use has not been established, the intern team collaborated closely with healthcare professionals to get valuable user feedback on the design. The team conducted user research sessions with professionals from institutions such as the Fraser Health Authority and Critical Care in Surrey, B.C. This research was critical for validating the team’s ideas and features and for deciding what direction to take the project. “We needed end user input. We would show them our ideas, get feedback, then iterate on the design on a weekly basis,” said Charmaine Lee, program manager intern on the project, emphasizing the importance of user feedback and how it shaped the end result.

The project team also wanted to show how machine learning models could be consumed through Xamarin applications, enabling developers to deploy their own models into other intelligent app solutions. “We want people to contribute to the repository. The average software developer can see this and get the source code and experiment with it – you don’t need to dive into any specialized field or specialized skills,” shares Jacky Lui, software engineer.

This research project has the following features and functionalities:

- Offline ML model inference

- Integration with native photo, camera, and cloud storage pickers

- Image cropping prior to analysis

- Image classification and likelihood prediction for 14 different chest conditions

- Class Activation Maps (CAMs) for each of the 14 conditions

- Integration with native sharing interfaces

- Sample telemetry collection

The project was built with the following tech stack:

- Xamarin.Android and Xamarin.iOS SDKs: app UI and codebase

- Visual Studio Team Services: repository hosting, continuous integration, and work item tracking

- Visual Studio App Center: automated builds, crash reports, telemetry, and distribution

- CoreML and TensorFlow Mobile: ML model integration on iOS and Android, respectively

- Various NuGet packages: cropping, image manipulation, and similar utilities

Xamarin.Android and Xamarin.iOS are mobile app software development kits that enable developers to write Android and iOS applications in C#, using native user interface elements and native APIs. Applications built with the Xamarin SDKs are also compiled for native performance, enabling them to leverage platform-specific hardware capabilities. [https://www.xamarin.com/platform]

By using the Xamarin.Android and Xamarin.iOS SDKs, the interns could share business logic and other common code across projects. For example, much of the ML model post-processing and image manipulation code is shared across Android and iOS. The Xamarin SDKs also expose platform-specific APIs and allow UI code to be written separately, so the team could take full advantage of features such as TensorFlow Mobile and CoreML and integrate with the native file picker and camera. NuGet packages were used to implement cropping and image overlays.
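As a rough illustration of the kind of post-processing that can live in shared code, here is a minimal sketch that pairs a 14-element model output with condition names and ranks them by likelihood. The class name `ChestConditionScores`, the `Rank` method, and the label order are assumptions made for this example and are not taken from the project's source. The names are the 14 findings labelled in the NIH ChestX-ray14 dataset; the order would have to match however the model was actually trained.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shared helper: no Android- or iOS-specific types are used.
public static class ChestConditionScores
{
    // Assumption: index i of the model output corresponds to Labels[i].
    public static readonly string[] Labels =
    {
        "Atelectasis", "Cardiomegaly", "Effusion", "Infiltration", "Mass",
        "Nodule", "Pneumonia", "Pneumothorax", "Consolidation", "Edema",
        "Emphysema", "Fibrosis", "Pleural Thickening", "Hernia"
    };

    // Pairs each score with its label and sorts from most to least likely.
    public static IReadOnlyList<KeyValuePair<string, float>> Rank(float[] modelOutput)
    {
        if (modelOutput == null)
            throw new ArgumentNullException(nameof(modelOutput));
        if (modelOutput.Length != Labels.Length)
            throw new ArgumentException(
                $"Expected {Labels.Length} scores but got {modelOutput.Length}.",
                nameof(modelOutput));

        return Labels
            .Select((label, i) => new KeyValuePair<string, float>(label, modelOutput[i]))
            .OrderByDescending(pair => pair.Value)
            .ToList();
    }
}
```

Because the helper relies only on base class library types, the same file could compile unchanged into both the Android and iOS apps, with each platform supplying the raw `float[]` from its own model runtime.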

Using App Center

Microsoft’s Visual Studio App Center is a collection of services that enables developers to manage and ship their applications on any platform. The project team used App Center to automatically build and distribute the app and to collect analytics and crash reports.

App Center allowed the team to easily distribute their experimental app to beta testers and users via email distribution lists. The project was built and distributed on every pull request, so testers always had the latest features. The team was able to receive fast feedback from their testers, enabling them to iterate quickly.

During user testing, crash reports are invaluable for developers. App Center automatically generates crash logs for every application crash event. Detailed information such as stack traces and device info provides insight into the health of the application. During the internship, interns had bi-weekly ‘bug-bashes’, where the other Garage intern teams would test and break each other’s projects. This was also a great opportunity to check out the hard work and progress of the other Garage intern teams. Using App Center Crash Reports made it easier to discover and address critical bugs that arose.

Zero to AI Hero

The Xamarin SDKs also allow trained ML models to be quickly deployed across multiple platforms. The team converted the trained Keras model provided by their sponsors into `.mlmodel` (CoreML format) and `.pb` (TensorFlow format) for the application to consume. Xamarin.iOS has CoreML built-in, so loading the model is as simple as:

```csharp
CoreMLImageModel mlModel = new CoreMLImageModel(modelName, inputFeatureName, outputFeatureName);
```

Running the model on an input is done by:

```csharp
float[] modelOutput = mlModel.Predict(input);
```

where `input` is of type `MLMultiArray`.

While Xamarin.Android does not have TensorFlow Mobile built-in, Xamarin can generate binding libraries, which the team used to generate C# bindings to the TensorFlow `.aar` archive. Once the TensorFlowAndroid binding library is created, the model is loaded by:

```csharp
TensorFlowInferenceInterface tfInterface = new TensorFlowInferenceInterface(assets, modelAssetPath);
```

where `assets` is an `AssetManager` and `modelAssetPath` is a `string` for the path to the model file.

Running the model on an input begins by feeding the input tensor to the graph:

```csharp
tfInterface.Feed(inputName, input, batchSize, width, height, channels);
```
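In TensorFlow Mobile's Java API, `feed` only copies data into the input tensor; the graph is then executed with `run`, and the result is read back with `fetch`. The sketch below shows that sequence, assuming the generated binding surfaces these methods as `Feed`, `Run`, and `Fetch`. To stay self-contained it uses a hypothetical `IInferenceSession` interface as a stand-in for the bound `TensorFlowInferenceInterface`; the interface, the `ChestXRayInference` class, and all parameter names are illustrative and not taken from the project's source.

```csharp
using System;

// Hypothetical stand-in for the bound TensorFlowInferenceInterface.
// Assumption: the binding exposes Java's feed/run/fetch as Feed/Run/Fetch.
public interface IInferenceSession
{
    void Feed(string inputName, float[] src, params long[] dims);
    void Run(string[] outputNames);
    void Fetch(string outputName, float[] dst);
}

public static class ChestXRayInference
{
    // Feeds one image, runs the graph, and returns the raw output scores.
    public static float[] Predict(
        IInferenceSession session,
        string inputName,
        string outputName,
        float[] pixels,
        int width,
        int height,
        int channels,
        int outputLength)
    {
        if (session == null) throw new ArgumentNullException(nameof(session));
        if (pixels == null) throw new ArgumentNullException(nameof(pixels));
        if (pixels.Length != width * height * channels)
            throw new ArgumentException(
                "Pixel buffer does not match width * height * channels.",
                nameof(pixels));

        // 1. Copy the image into the input tensor (batch size of 1).
        session.Feed(inputName, pixels, 1, width, height, channels);

        // 2. Execute the graph up to the requested output node.
        session.Run(new[] { outputName });

        // 3. Copy the output tensor into a managed array.
        var scores = new float[outputLength];
        session.Fetch(outputName, scores);
        return scores;
    }
}
```

In the app itself, a thin adapter around the real binding object would take the place of `IInferenceSession`, and `outputLength` would be 14 for the chest x-ray model described here.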

This proof of concept is not limited to chest x-rays, either. Any CoreML or TensorFlow model can be consumed through Xamarin applications, enabling developers to deploy their own models to empower their solutions on the intelligent edge.

Grab the source code for Mobile Chest X-Ray Analysis and start experimenting with your own prototype.

Interested in Azure ML? Check out the Azure Machine Learning Studio site.

Learn more about The Garage Internship Program in Vancouver.

Meet the Garage intern team and watch the app in action on the Xamarin Show.

Android is a trademark of Google LLC. TensorFlow is a trademark of Google Inc. IOS is a trademark or registered trademark of Cisco in the U.S. and other countries and is used under license.